In March 2022, the Payment Card Industry Security Standards Council unveiled PCI DSS v4.0, a revised version of its Data Security Standard. We previously examined the updates and new requirements of PCI DSS v4.0, but there are two changes in particular that deserve a closer look: the client-side security requirements put forth in sections 6.4.3 and 11.6.1.

These sections underscore the importance of client-side web security for businesses engaged in online payments, introducing more stringent regulations governing HTTP headers and payment page scripts. Section 11.6.1, for instance, seeks to counter the potential impact of Magecart attacks by mandating a tamper-detection mechanism. This mechanism would promptly alert organizations about unauthorized alterations to HTTP headers and payment page content as perceived by the consumer’s browser.

Meanwhile, Section 6.4.3 necessitates that organizations uphold the integrity of all payment page scripts executed in the consumer’s browser. This requirement extends to scripts sourced from third-party sites, emphasizing the need for robust controls that ensure the security of all elements interacting with the consumer’s browser, especially within payment transactions.

In this article, we take an in-depth look at the new client-side security requirements of PCI DSS section 6.4.3, as well as solutions for addressing these requirements. Stay tuned for a future blog post on section 11.6.1.

But first, let’s take a closer look at client-side threats, the challenges they present to businesses, and why the PCI council made these changes.

To understand client-side security, we must first understand the two fundamental components of a web application: The server side and the client side.

The server side is the part of a web application that runs on the server where the website or application is hosted. It handles all of the back-end functionalities of an application, including data processing, storage, and management, as well as business logic and interactions with databases.

The client side is the part of a web application that runs directly within a user’s web browser, rendering everything a user sees and interacts with on a website or web application. Because client-side code executes on the user’s device, it enables dynamic content updates without requiring full page reloads, leading to faster interactions and smoother interfaces. However, placing this code on the client side, outside of your organization’s control, also creates inherent risk of attacks such as code injection, cross-site scripting (XSS), and data manipulation, making it a prime target for cybercriminals seeking to exploit user interactions and steal sensitive information. And, when third-party libraries are involved, the risk is even greater.

Client-side security is about ensuring the safety and proper behavior of the components within a web application that run in a visitor’s web browser. In simple terms, it’s making sure everything works as intended and that sensitive data remains secure. This is, of course, easier said than done.

Challenges with client-side security include lack of runtime visibility, frequent script changes, vulnerabilities introduced by third-party vendors, and insufficient security reviews due to fast-paced development.

And these are exactly the sort of challenges that are addressed with the requirements of PCI DSS v4.0.

One of the most significant updates in PCI DSS v4.0 is the set of changes made to Section 6.4 of the standard, which focuses on the protection of public-facing web applications. The requirements and best practices under this section have been updated with a new focus on securing the front end of web applications from client-side attacks, particularly on securing third-party scripts and mitigating potentially malicious scripts.

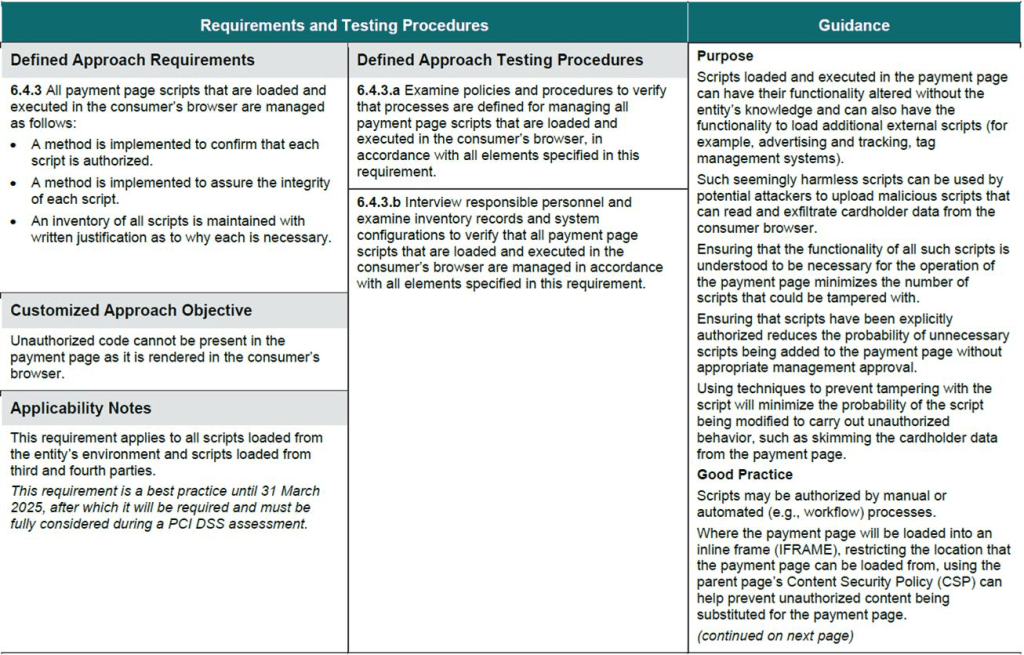

In section 6.4.3, the standard acknowledges the threat posed by client-side scripts, noting that “scripts loaded and executed on the payment page can have their functionality altered without the entity’s knowledge, and can also have external scripts.”

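To mitigate these potential threats, section 6.4.3 introduces three new PCI DSS compliance requirements for how scripts on payment pages should be managed: a method must be in place to confirm that each script is authorized, a method must be in place to assure the integrity of each script, and an inventory of all scripts must be maintained with written justification for why each one is necessary.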

In practical terms, these obligations involve cataloging all code on payment pages, justifying their necessity, and ensuring authorized code remains unchanged. Let’s take a closer look.

These requirements are currently considered best practices, but they will become mandatory as of March 31, 2025, at which point they will be considered in all PCI DSS assessments.

The first requirement essentially demands that any script which fires on the payment page must have prior authorization. This may seem straightforward, but given the complexity of modern web pages, it can be a difficult task.

The recommendation put forth by the PCI DSS is to authorize scripts via a workflow process or to utilize a content security policy (CSP) to prevent unauthorized content from being substituted on the payment page. This solution is good enough for small organizations with a limited number of scripts running on their website, but manual processes and even automated workflow processes can become a burden at scale.

The second requirement demands verifying the “integrity” of each script. In simple terms, that means ensuring code remains unchanged since being verified as non-malicious. Again, a seemingly simple requirement that creates a complicated task.

The issue is that client-side code, especially third-party code, is dynamic. Vendors providing functionality like ads and tracking, customer support widgets, chatbots, etc., frequently update their code with security patches, new functionality, or bug fixes, and any fourth-party libraries that their scripts rely on are apt to do the same.

And first-party code may run into the same issues when based on open-source libraries.

What’s more, client-side code is often designed to change as the webpage loads to respond to identifiers and provide a more customized experience.

In light of these challenges, a manual process to verify script integrity would be a full-time job. Because client-side code is not static, a solution of static lists and integrity checks will not hold up.

Requirement 3 of PCI DSS v4.0 Section 6.4.3 involves maintaining an inventory of all scripts running on payment pages and providing written justification for the necessity of each script. This ensures that only authorized scripts are executed and that there’s a clear understanding of why each script is essential for the functionality of the payment page.

In essence, this requirement aims to enhance security by promoting transparency and control over the scripts that interact with sensitive payment data. It prevents the inclusion of unnecessary or potentially risky scripts that could compromise the security of the payment process. By documenting and justifying the presence of each script, organizations can better manage their web application’s attack surface and reduce the potential for unauthorized or malicious code to be executed.

This requirement demands that organizations maintain a list of all scripts executed within the browser, as well as written reasoning about the necessity of each individual script. By justifying individual scripts, organizations are able to ensure that all scripts running are identified and used only for their intended purpose.
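One way to picture such an inventory is as a small structured record per script, checked against the scripts actually observed on the payment page. The following is a minimal sketch, not a format prescribed by PCI DSS: the `ScriptRecord` fields, the `find_unjustified` helper, and the example URLs are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScriptRecord:
    """One inventory entry for a payment-page script (hypothetical schema)."""
    url: str
    owner: str
    justification: str


def find_unjustified(inventory, observed_urls):
    """Return observed script URLs with no inventory entry or an empty justification."""
    justified = {r.url for r in inventory if r.justification.strip()}
    return sorted(u for u in observed_urls if u not in justified)


# Illustrative data only; these URLs are placeholders.
inventory = [
    ScriptRecord("https://shop.example/js/checkout.js", "payments team",
                 "Renders the card form and submits the payment."),
    ScriptRecord("https://cdn.vendor.example/chat.js", "support team", ""),
]
observed = [
    "https://shop.example/js/checkout.js",
    "https://cdn.vendor.example/chat.js",
    "https://tracker.example/pixel.js",
]

for url in find_unjustified(inventory, observed):
    print("needs review:", url)
```

In this sketch, a script is flagged either because nobody has written down why it is needed or because it is not in the inventory at all, which are the two gaps this requirement is meant to close.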

Given the fact that a major online retailer might have dozens of integrations and countless unique scripts, including secondary dependencies that frequently change within the browser, the task of documenting justifications for each script can be very difficult. Even with a committed team, the effort needed to provide justifications for each individual script could extend over several months.

Complying with these new regulations is feasible with legacy technologies, including some mentioned in the regulation itself, like content security policy (CSP) and subresource integrity (SRI). However, for complex environments, these tools could require a significant investment of time and skills. Let’s look at how these tools can be leveraged to meet these requirements, and where they fall short.

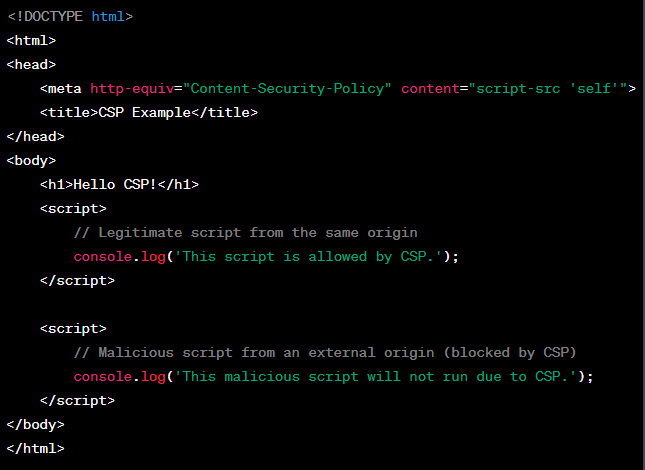

Content Security Policy (CSP) is a security standard that lets website administrators identify the sources of content that are allowed to be loaded on their website. Essentially, CSP acts as an in-browser firewall that controls the domains that can load content on your site, be it scripts, images, or other resources. CSP is configured by adding a special HTTP header to the web server’s responses. This header provides instructions to the web browser about which sources of content are considered safe and should be allowed to execute on the web page. This can help mitigate client-side threats like cross-site scripting (XSS) attacks, but it also creates the tedious manual process of managing the allowlist.

In the above example, the tag with the Content-Security-Policy header specifies that only scripts from the same origin (‘self’) are allowed to run. As a result, the legitimate script within the same origin will execute and log a message to the console, while the malicious script from an external origin will be blocked by the CSP and will not execute.
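To make the allowlist idea concrete, here is a rough Python sketch that builds a `script-src 'self'` header value and then models, in a deliberately simplified way, the decision a browser would make for two script URLs. The helper names (`build_csp_header`, `script_allowed`) and the example domains are assumptions for illustration; real browsers also handle wildcards, schemes, paths, nonces, and hashes, which this sketch ignores.

```python
from urllib.parse import urlsplit


def build_csp_header(directives):
    """Serialize a dict of directive -> source list into a CSP header value."""
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in directives.items())


def origin_of(url):
    """Return the scheme://host[:port] origin of a URL."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}"


def script_allowed(script_url, page_url, script_sources):
    """Simplified check: 'self' matches the page origin, other entries match by exact origin."""
    script_origin = origin_of(script_url)
    for source in script_sources:
        if source == "'self'" and script_origin == origin_of(page_url):
            return True
        if source == script_origin:
            return True
    return False


policy = {"script-src": ["'self'"]}
header_value = build_csp_header(policy)
print("Content-Security-Policy:", header_value)

page = "https://shop.example/checkout"  # placeholder payment page
print(script_allowed("https://shop.example/js/legit.js", page, policy["script-src"]))
print(script_allowed("https://evil.example/malicious.js", page, policy["script-src"]))
```

Note that the check looks only at where a script comes from, never at what the script contains.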

Implementing a Content Security Policy (CSP) can enhance security by restricting script sources, but relying solely on CSP to meet the requirements of PCI DSS v4.0 Section 6.4.3 presents several significant limitations.

For starters, while CSP addresses script origin and loading, it doesn’t cover the comprehensive scope of this PCI requirement.

CSP authorizes scripts based on domain, not on code. And as discussed above, the dynamic behavior of scripts means that a script that appears benign, even one coming from a trusted domain, can be compromised and changed to execute malicious code. A CSP has no visibility into, or control over, the behavior of scripts.

And maintaining a CSP can be labor intensive. The complexity of crafting and maintaining an effective CSP grows with application size, and an overly strict CSP might generate excessive false alerts, overwhelming security teams. Furthermore, CSP’s impact on functionality can create a trade-off between security and business agility, as changes are typically pushed through the IT group, which can cause heavy workloads and internal bottlenecks.

Beyond these challenges, the historical context of script behavior and maintaining integrity over time—both crucial for PCI compliance—fall outside the scope of CSP. To address the requirements of PCI DSS v4.0 Section 6.4.3, a more comprehensive approach that combines CSP with other strategies is essential.

Another tool that can be used to partially meet the requirements of PCI 6.4.3 is subresource integrity (SRI), a security feature in web browsers that helps ensure the integrity of resources (such as scripts, stylesheets, and fonts) loaded from external sources.

SRI works by allowing you to include a hash value in your HTML code alongside the URL of the resource. When the browser loads the resource, it calculates its hash and compares it to the provided hash. If they match, the resource is considered valid and hasn’t been tampered with.

In the above example, the integrity attribute is set with the hash value (sha256-K6pWzU1+qff4LgKKnPG4fB6scsB2/pdxr3i8C9eMX00=) of the external script. The hash value is calculated using a cryptographic hash function (such as SHA-256) and is unique to the content of the script file. If the content of the script ever changes, the hash value will also change, and the browser will consider the script to be compromised.

The crossorigin="anonymous" attribute is also included to ensure that the browser fetches the script without sending any credentials (cookies or HTTP authentication) along with the request.
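For readers who want to see where an integrity value comes from, the sketch below computes one in Python: hash the script body, base64-encode the digest, and prefix it with the algorithm name. The function names, the placeholder script body, and the vendor URL are assumptions for illustration, and `integrity_matches` only imitates the comparison a browser performs.

```python
import base64
import hashlib


def sri_hash(content: bytes, algorithm: str = "sha256") -> str:
    """Return an SRI integrity value of the form '<algorithm>-<base64 digest>'."""
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src: str, content: bytes) -> str:
    """Build a <script> tag carrying integrity and crossorigin attributes."""
    return (f'<script src="{src}" integrity="{sri_hash(content)}" '
            f'crossorigin="anonymous"></script>')


def integrity_matches(content: bytes, integrity: str) -> bool:
    """Imitate the browser's check: recompute the hash and compare."""
    algorithm = integrity.split("-", 1)[0]
    return sri_hash(content, algorithm) == integrity


original = b"console.log('hello');"  # placeholder script body
tag = script_tag("https://cdn.vendor.example/widget.js", original)
print(tag)
print(integrity_matches(original, sri_hash(original)))
print(integrity_matches(original + b" // changed", sri_hash(original)))
```

Even a one-character change to the script body produces a different digest, which is why the final comparison fails.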

This hashing functionality means that SRI can be used to meet the requirements to confirm authorization and verify the integrity of client-side scripts that are loaded from external sources (although at considerable labor cost), but it cannot provide an inventory or justification for scripts.

The primary drawback of SRI is that it is a bear to implement and maintain, particularly in scenarios where scripts frequently change or update.

When a website incorporates external scripts, such as those from third-party vendors, these scripts may frequently change or update. Each time a script is modified, a new hash value needs to be generated to reflect the updated content. This new hash is then added to the SRI hash list, which the browser uses to verify the integrity of the script when loading it.

This process might seem straightforward for a single script, but consider the complexity of modern websites that often rely on numerous scripts from various sources. Websites can easily have dozens or even hundreds of scripts, and these scripts can change frequently—sometimes thousands of times a year due to updates, bug fixes, or feature enhancements by the script providers.

Managing this dynamic environment becomes a challenge. The website operator or security team must consistently track script changes, create new hash values, and update the SRI hash list accordingly. Any lapses or errors in this process can result in mismatched hashes, which can lead to browsers refusing to load scripts, causing functional issues, or even breaking parts of the website.
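The tracking chore described here can be sketched as a comparison between recorded integrity values and the current script bodies. This is only an illustration under stated assumptions: `stale_entries` is a hypothetical helper, the script bodies are inline placeholders standing in for whatever a real fetch would return, and SHA-384 is simply one of the algorithms SRI accepts.

```python
import base64
import hashlib


def sha384_integrity(content: bytes) -> str:
    """Compute an SRI-style integrity value using SHA-384."""
    digest = hashlib.sha384(content).digest()
    return "sha384-" + base64.b64encode(digest).decode("ascii")


def stale_entries(recorded, current_content):
    """Return URLs whose recorded integrity value no longer matches the current body."""
    stale = []
    for url, integrity in recorded.items():
        body = current_content.get(url)
        if body is None or sha384_integrity(body) != integrity:
            stale.append(url)
    return sorted(stale)


# Placeholder script bodies standing in for fetched content.
v1 = b"function chat(){ /* v1 */ }"
v2 = b"function chat(){ /* v2 with a bug fix */ }"
analytics = b"function track(){}"

recorded = {
    "https://cdn.vendor.example/chat.js": sha384_integrity(v1),
    "https://cdn.vendor.example/analytics.js": sha384_integrity(analytics),
}
current = {
    "https://cdn.vendor.example/chat.js": v2,  # vendor shipped an update
    "https://cdn.vendor.example/analytics.js": analytics,
}

print(stale_entries(recorded, current))
```

Every URL this reports is a script the browser would refuse to load until someone reviews the change and publishes a new hash.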

Moreover, the need for timely updates amplifies the operational workload. Failing to update the hash list in sync with script changes can inadvertently create security gaps or disrupt the user experience. This constant vigilance and effort to maintain SRI can strain resources and divert attention from other critical security tasks.

Content Marketing Manager

Jeff is the resident content marketing expert at CHEQ. He has several years of experience as a trained journalist, and more recently in his career found a knack for communicating complex cybersecurity topics in an approachable yet detailed manner.